Installation

Raspberry Pi

The Nimbus 3D is a time-of-flight camera for the Raspberry Pi that captures high-resolution 3D point clouds. A Raspberry Pi 4 is recommended for the best experience. Prepared Raspberry Pi OS images are available; if you use one of them, you can skip the following installation steps.

nimbus-userland

The official Raspberry Pi OS kernel includes a driver for the Nimbus 3D. To use it, the corresponding userland packages must be installed from the Nimbus apt repository.

- Update to the bleeding-edge kernel:

sudo rpi-update

- Add the Nimbus 3D apt repository

echo 'deb http://apt.pieye.org/debian/ nimbus-stable main' | sudo tee -a /etc/apt/sources.list

wget -O - -q http://apt.pieye.org/apt.pieye.org.gpg.key | sudo apt-key add -

sudo apt update

- Add the device-tree overlay for the imager (IRS1125) to your boot config:

echo 'dtoverlay=irs1125' | sudo tee -a /boot/config.txt

- Install nimbus-server and/or libnimbus-dev, depending on your use case (nimbus-web and nimbus-python need the nimbus-server):

sudo apt-get install nimbus-server

sudo apt-get install libnimbus-dev

- Reboot the Raspberry Pi

sudo reboot now
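Because `tee -a` appends unconditionally, running the overlay command twice leaves a duplicate `dtoverlay=irs1125` entry in /boot/config.txt. The following is a minimal sketch of an idempotent alternative in plain POSIX shell; `append_once` is a hypothetical helper, not part of the Nimbus packages.

```shell
# append_once LINE FILE
# Hypothetical helper: appends LINE to FILE only if FILE does not
# already contain it as a complete line.
append_once() {
    line=$1
    file=$2
    # -q quiet, -x whole-line match, -F fixed string (no regex interpretation)
    if grep -qxF -- "$line" "$file" 2>/dev/null; then
        return 0
    fi
    # If the file does not end with a newline, add one so LINE starts on its own line.
    if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
        printf '\n' >> "$file"
    fi
    printf '%s\n' "$line" >> "$file"
}
```

For the overlay alone, the same check fits on one line: `grep -qxF 'dtoverlay=irs1125' /boot/config.txt || echo 'dtoverlay=irs1125' | sudo tee -a /boot/config.txt`. Calling the function on /boot/config.txt directly requires a root shell.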

nimbus-web

nimbus-web is the web interface for the Nimbus 3D. It shows the point cloud, depth image and intensity image in real time, and lets you adjust the exposure and read the log. The source code is available on GitHub.

- Install nginx and git

sudo apt-get install nginx git

- Clone the code into the home directory of the pi user (/home/pi):

git clone https://github.com/pieye/nimbus-web.git

- Edit the file /etc/nginx/sites-available/default (with sudo) and change the root directive on line 41 from

root /var/www/html;

to

root /home/pi/nimbus-web;

- Restart nginx

sudo service nginx restart

- Open a browser and navigate to the IP address of your Raspberry Pi; you should see the web interface.
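Instead of editing the nginx site by hand, the `root` change can be scripted. A minimal sketch, assuming GNU sed (as shipped with Raspberry Pi OS) and a site file with a single active `root` directive; `set_nginx_root` is a hypothetical helper name.

```shell
# set_nginx_root CONF DIR
# Hypothetical helper: points every active "root ...;" directive in an
# nginx site file at DIR. Commented-out lines (starting with #) are left alone.
set_nginx_root() {
    conf=$1
    dir=$2
    # \1 keeps the original indentation; only the path after "root" changes.
    sed -i "s|^\([[:space:]]*\)root[[:space:]][^;]*;|\1root ${dir};|" "$conf"
}
```

For a one-off change the equivalent command is `sudo sed -i 's|root /var/www/html;|root /home/pi/nimbus-web;|' /etc/nginx/sites-available/default`. Running `sudo nginx -t` before restarting nginx checks the edited configuration for syntax errors.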

nimbus-python

nimbus-python is the Python interface for the Nimbus 3D. It lets you receive the 3D data in Python on any machine in the local network. The source code is available on GitHub, and the package can also be installed directly via pip:

pip install nimbus-python

nimbus-ros

The Nimbus 3D can also publish its data directly in ROS (Robot Operating System), which requires installing nimbus-ros. Because this process is quite time-consuming, using the prepared image is strongly recommended.

To use the low-level C interface, the nimbus-server must be stopped. Check whether it is running, and stop it, with the following commands. Before using nimbus-python or nimbus-web again, start the nimbus-server again (sudo systemctl start nimbusServer.service).

sudo systemctl status nimbusServer.service

sudo systemctl stop nimbusServer.service

1. Clone the nimbus-ros repository into the src folder of your ROS workspace:

mkdir -p ~/catkin_ws/src

cd ~/catkin_ws/src

git clone https://github.com/pieye/nimbus-ros.git

2. The following installation requires 4 GB of memory (RAM plus swap). If that much is not available, increase the swap size accordingly:

sudo dphys-swapfile swapoff

sudo nano /etc/dphys-swapfile

3. Change these lines as follows, then save the file and re-create the swap file:

CONF_SWAPSIZE=3000

CONF_MAXSWAP=4096

sudo dphys-swapfile setup

sudo dphys-swapfile swapon
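The manual edit of /etc/dphys-swapfile can also be scripted, together with a quick check of how much RAM plus swap is available afterwards. A minimal sketch assuming GNU sed and a Linux /proc/meminfo; `set_conf_value` and `total_mem_mb` are hypothetical helper names.

```shell
# set_conf_value FILE KEY VALUE
# Hypothetical helper: sets KEY=VALUE in a shell-style config file,
# replacing an existing (or commented-out "#KEY=") entry, or appending it.
set_conf_value() {
    file=$1 key=$2 value=$3
    if grep -qE "^#?[[:space:]]*${key}=" "$file"; then
        sed -i -E "s|^#?[[:space:]]*${key}=.*|${key}=${value}|" "$file"
    else
        printf '%s=%s\n' "$key" "$value" >> "$file"
    fi
}

# total_mem_mb [MEMINFO]
# Hypothetical helper: prints RAM + swap in MiB (both reported in kB by meminfo).
total_mem_mb() {
    awk '/^(MemTotal|SwapTotal):/ { kb += $2 } END { printf "%d\n", kb / 1024 }' \
        "${1:-/proc/meminfo}"
}
```

From a root shell, between `swapoff` and `setup`, run `set_conf_value /etc/dphys-swapfile CONF_SWAPSIZE 3000` and `set_conf_value /etc/dphys-swapfile CONF_MAXSWAP 4096`. Once swap is enabled again, `total_mem_mb` should report a total close to the 4 GB required above.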

4. Install ROS Melodic from source manually, or run the provided install script from ~/catkin_ws/src:

./nimbus-ros/scripts/install.sh

5. Build the nimbus_3d_driver:

cd ~/catkin_ws

catkin_make

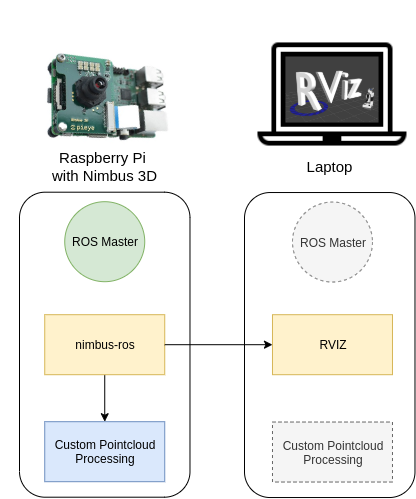

6. Configure ROS to run accros multiple machines The following diagram shows the possible architectures for using your Nimbus 3D. The ROS driver “nimbus_3d_driver” is running on the Raspberry Pi and publishes the pointcloud. In this guide the ROS master is also running on the Pi, but it could run on any other machine in your local network. The Pointcloud is afterwards visualized on another Computer with a Display connected e.g. Laptop. Your algorithms to process the captured data can run locally on your Raspberry or any other device in the local network.

We now configure ROS to run the master on the Raspberry Pi and access the data from another machine running ROS Melodic with RViz installed. If that machine (e.g. your laptop) runs Linux, add the following lines to its .bashrc. ROS_MASTER_URI must point to the IP address of your Raspberry Pi (192.168.1.1 in this example), and ROS_IP must be the IP address of the laptop itself (192.168.1.2 in this example):

nano ~/.bashrc

export ROS_MASTER_URI=http://192.168.1.1:11311

export ROS_IP=192.168.1.2

Open a new terminal (or run source ~/.bashrc) so that the variables take effect.

If you are using Windows, set both as environment variables instead:

Name: ROS_MASTER_URI, Value: http://192.168.1.1:11311

Name: ROS_IP, Value: 192.168.1.2

SSH into your Raspberry Pi and start the ROS master:

roscore

Start RViz on your laptop:

rviz

If the network configuration is correct, RViz starts and connects to the ROS master on the Raspberry Pi.
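A common mistake is setting ROS_IP on the laptop to the Pi's address, when it must be the address of the machine on which it is set. The following minimal sketch prints the two export lines after checking that both arguments look like IPv4 addresses. `ros_env` is a hypothetical helper, and 192.168.1.1 (Pi) and 192.168.1.2 (laptop) are assumed example addresses.

```shell
# ros_env MASTER_IP LOCAL_IP
# Hypothetical helper: prints the ROS network exports for ~/.bashrc.
ros_env() {
    for ip in "$1" "$2"; do
        # Accept only dotted-quad IPv4 addresses with octets 0-255.
        if ! printf '%s\n' "$ip" | awk -F. 'NF != 4 { exit 1 }
            { for (i = 1; i <= 4; i++) if ($i !~ /^[0-9]+$/ || $i > 255) exit 1 }'; then
            echo "ros_env: invalid IPv4 address: '$ip'" >&2
            return 1
        fi
    done
    printf 'export ROS_MASTER_URI=http://%s:11311\n' "$1"   # master runs on the Pi
    printf 'export ROS_IP=%s\n' "$2"                        # this machine's own address
}
```

On the laptop, for example: `ros_env 192.168.1.1 192.168.1.2 >> ~/.bashrc`. If RViz lists the Nimbus topics but receives no data, the laptop usually cannot resolve the Pi's hostname; exporting ROS_IP with the Pi's own address on the Pi as well (before starting roscore and the driver) typically fixes this.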

7. Start the Nimbus ROS driver

On the Raspberry Pi, run the following from ~/catkin_ws. The provided launch file starts the nimbus node and a static coordinate transform.

source devel/setup.bash

roslaunch nimbus_3d_driver nimbus.launch

The topics on which the point cloud, intensity image and range image are published can be changed by setting new topic names in the launch file. This is necessary when several Nimbus cameras are used in the same local network at the same time.

nimbus-ros (optional)

Clock synchronization

Each point cloud includes the timestamp of the initial image acquisition. If these timestamps are compared across devices, the clocks should be synchronized with a protocol such as NTP; PTP hardware timestamping is not available on the Raspberry Pi. Chrony is a commonly used tool for this task.
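In one possible setup, one machine serves time to the local network and the Raspberry Pi follows it. The sketch below only prints minimal chrony.conf fragments using the standard `allow`, `local stratum` and `server ... iburst` directives. The helper names, the subnet, and the choice of the laptop as time server are assumptions.

```shell
# Hypothetical helpers that print minimal chrony.conf fragments.

# chrony_server_conf SUBNET: for the machine that serves time (e.g. the laptop).
chrony_server_conf() {
    printf 'allow %s\n' "$1"      # answer NTP requests from this subnet
    printf 'local stratum 10\n'   # keep serving time even without an upstream source
}

# chrony_client_conf SERVER_IP: for the Raspberry Pi that follows the server.
chrony_client_conf() {
    printf 'server %s iburst\n' "$1"   # iburst speeds up the initial synchronization
}
```

Append the output with `| sudo tee -a /etc/chrony/chrony.conf` (the Debian location), restart the service with `sudo systemctl restart chrony`, and check the result on the Pi with `chronyc sources` and `chronyc tracking`.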

Configuration

The parameters that affect the amount of transmitted data can be adjusted.

A 1 Gbit/s Ethernet connection to the Raspberry Pi is highly recommended; with it, you can launch the default configuration without any changes. If you only have a 100 Mbit/s interface, load the provided preset by replacing default.yaml with fast_ethernet.yaml in the launch file (launch/nimbus.launch). This reduces the resolution. If you need to reduce the bandwidth even further (e.g. over Wi-Fi) but still need a reliable point cloud, replace the config with low_bandwitdh.yaml instead. This heavily reduces the resolution.

You can also adjust the parameters to your own needs. Depending on the setup, it may be useful to tune the auto exposure: if objects move fast or a minimum frame rate must be reached, it can help to disable HDR and set a maximum exposure time. The desired exposure can also be adjusted.
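Switching the preset means replacing the config file name referenced in launch/nimbus.launch. A minimal sketch assuming GNU sed; `swap_preset` is a hypothetical helper. The old and new preset names are passed in exactly as they appear in your checkout of nimbus-ros, so the sketch assumes no particular spelling.

```shell
# swap_preset LAUNCH_FILE OLD_PRESET NEW_PRESET
# Hypothetical helper: replaces references to OLD_PRESET with NEW_PRESET.
swap_preset() {
    launch=$1 old=$2 new=$3
    if ! grep -qF -- "$old" "$launch"; then
        echo "swap_preset: $old is not referenced in $launch" >&2
        return 1
    fi
    # Escape the dots so that "default.yaml" matches literally in sed.
    old_re=$(printf '%s' "$old" | sed 's/\./\\./g')
    sed -i "s|$old_re|$new|g" "$launch"
}
```

For example, from the package directory: `swap_preset launch/nimbus.launch default.yaml fast_ethernet.yaml`, then restart the driver with roslaunch.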

The following parameters can also be changed at runtime:

rosparam set /nimbus_3d_driver_node/XYZ_to_m [0.0 - 1.0]

rosparam set /nimbus_3d_driver_node/amplitude [0 - 3000]

rosparam set /nimbus_3d_driver_node/downsampling [true | false]

rosparam set /nimbus_3d_driver_node/downsampling_voxel_size [0.0 - 1.0]

rosparam set /nimbus_3d_driver_node/hdr_factor [0.0 - 1.0]

rosparam set /nimbus_3d_driver_node/exposure_mode [-1 (manual), 0 (default), 1 (Auto), 2 (HDR)]

rosparam set /nimbus_3d_driver_node/intensity_image [true | false]

rosparam set /nimbus_3d_driver_node/max_exposure [0 - 32766]

rosparam set /nimbus_3d_driver_node/pointcloud [true | false]

rosparam set /nimbus_3d_driver_node/range_image [true | false]
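Because rosparam accepts any value, a typo or an out-of-range number is easy to miss. The sketch below checks a value against the ranges listed above before calling `rosparam set`. `set_nimbus_param` and its DRY_RUN switch are hypothetical. Treating exposure_mode and max_exposure as integers is an assumption, and so is passing `--` before the parameter name so that rosparam's option parser does not read -1 as an option.

```shell
# set_nimbus_param NAME VALUE
# Hypothetical wrapper: validates VALUE against the documented range of NAME,
# then runs rosparam set. With DRY_RUN=1 it only prints the command.
set_nimbus_param() {
    name=$1 value=$2 kind=num
    case $name in
        XYZ_to_m|downsampling_voxel_size|hdr_factor) lo=0 hi=1 ;;
        amplitude) lo=0 hi=3000 ;;
        max_exposure) lo=0 hi=32766 kind=int ;;   # integer assumed
        exposure_mode) lo=-1 hi=2 kind=int ;;     # -1 manual, 0 default, 1 auto, 2 HDR
        downsampling|intensity_image|pointcloud|range_image) kind=bool ;;
        *) echo "set_nimbus_param: unknown parameter '$name'" >&2; return 1 ;;
    esac
    if [ "$kind" = bool ]; then
        if [ "$value" != true ] && [ "$value" != false ]; then
            echo "set_nimbus_param: $name expects true or false" >&2
            return 1
        fi
    elif ! awk -v v="$value" -v lo="$lo" -v hi="$hi" -v kind="$kind" 'BEGIN {
            # Whole numbers for kind=int, otherwise an optional decimal fraction.
            re = (kind == "int") ? "^-?[0-9]+$" : "^-?[0-9]+([.][0-9]+)?$"
            exit !(v ~ re && v + 0 >= lo && v + 0 <= hi)
        }'; then
        echo "set_nimbus_param: $name must be within [$lo, $hi], got '$value'" >&2
        return 1
    fi
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "rosparam set -- /nimbus_3d_driver_node/$name $value"
    else
        # "--" ends option parsing so negative values are treated as arguments.
        rosparam set -- "/nimbus_3d_driver_node/$name" "$value"
    fi
}
```

For example, `set_nimbus_param max_exposure 5000`, or `DRY_RUN=1 set_nimbus_param hdr_factor 0.5` to only print the command.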